How does it work over Hadoop and related components?

At its core, Apache Ranger has a centralized web application consisting of the policy administration, audit, and reporting modules. Authorized users can manage their security policies through the web tool or through REST APIs. These security policies are enforced within the Hadoop ecosystem by lightweight Ranger Java plugins, which run as part of the same process as the NameNode (HDFS), HiveServer2 (Hive), HBase server (HBase), Nimbus server (Storm), and Knox server (Knox), respectively. Thus there is no additional OS-level process to manage.

Is Apache Ranger a single point of failure?

No, Apache Ranger is not a single point of failure. Apache Ranger's plugins run within the same process as the component they protect.
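The in-process enforcement model can be pictured with a small sketch. This is not Ranger's plugin code (the real plugins are Java): `Policy`, `InProcessPlugin`, `refresh`, and `is_allowed` are hypothetical names invented for illustration, and the glob-style resource matching and deny-by-default behaviour are simplifying assumptions. The sketch also assumes the plugin holds a local copy of the policies it received from the central application, so that an access check is an ordinary function call inside the component's own process.

```python
from dataclasses import dataclass, field
from fnmatch import fnmatchcase


@dataclass(frozen=True)
class Policy:
    """Hypothetical, simplified stand-in for one security policy."""
    resource: str                       # glob pattern for a resource, e.g. a path
    users: frozenset = frozenset()      # users the policy applies to
    groups: frozenset = frozenset()     # groups the policy applies to
    accesses: frozenset = frozenset()   # permitted access types, e.g. {"read"}


@dataclass
class InProcessPlugin:
    """Hypothetical plugin object living inside the protected component."""
    policies: list = field(default_factory=list)

    def refresh(self, fetched):
        # Assumption: policies arrive from the central policy administration
        # application and are kept in memory for local evaluation.
        self.policies = list(fetched)

    def is_allowed(self, user, groups, resource, access):
        # Evaluate locally: no extra OS-level process is involved.
        for policy in self.policies:
            if not fnmatchcase(resource, policy.resource):
                continue
            if access not in policy.accesses:
                continue
            if user in policy.users or policy.groups & set(groups):
                return True
        # Simplification: this sketch denies when no policy matches.
        return False


plugin = InProcessPlugin()
plugin.refresh([
    Policy(
        resource="/data/sales/*",
        users=frozenset({"alice"}),
        groups=frozenset({"analysts"}),
        accesses=frozenset({"read"}),
    )
])
```

With this setup, `plugin.is_allowed("alice", [], "/data/sales/q1.csv", "read")` is granted through the user entry, the same request from a member of `analysts` is granted through the group entry, and a `write` request is refused because the policy lists only `read`.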

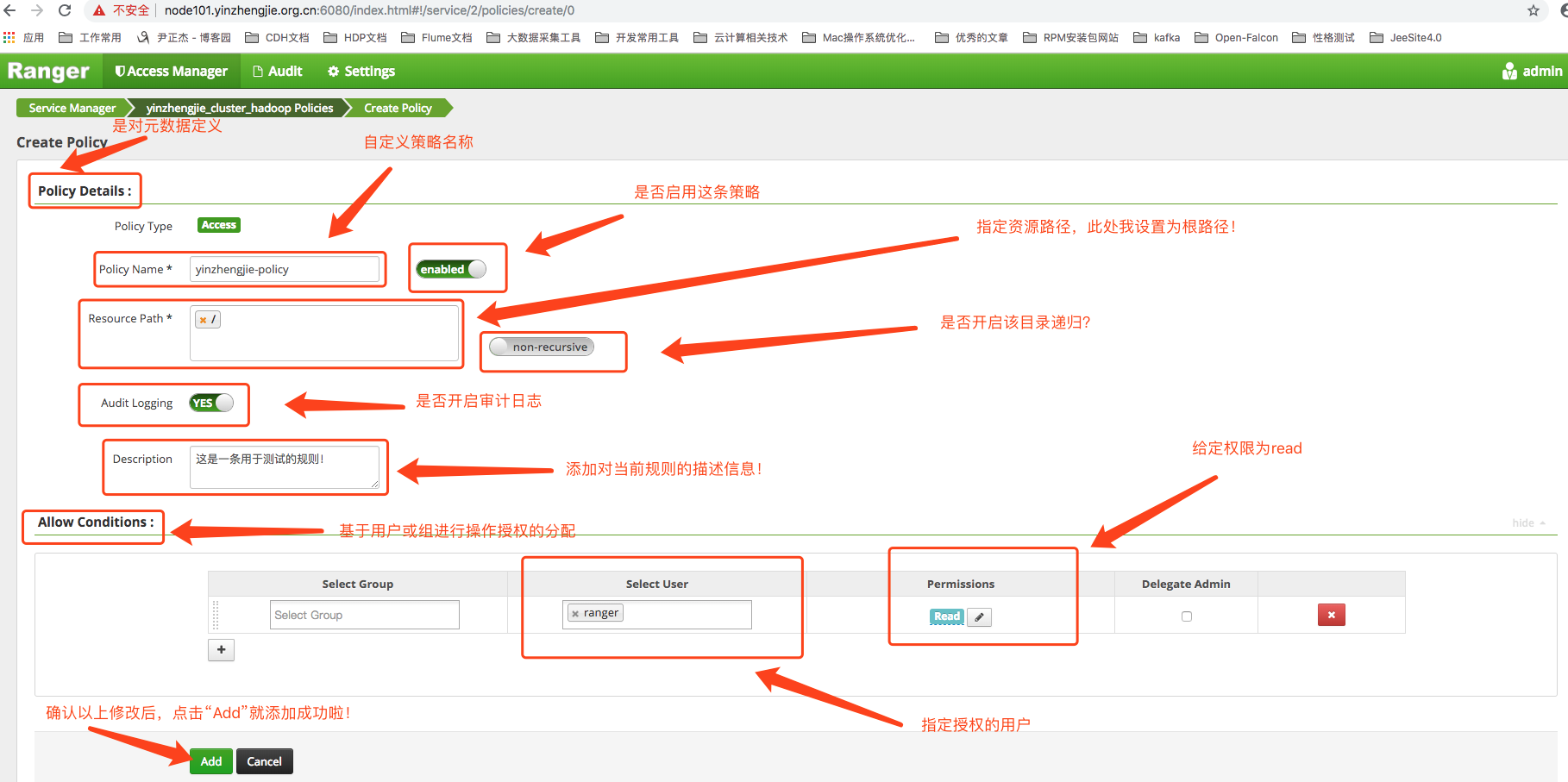

General

What does Apache Ranger offer for Apache Hadoop and related components?

Apache Ranger offers a centralized security framework to manage fine-grained access control over Hadoop and related components (Apache Hive, HBase, etc.). Using the Apache Ranger administration console, users can easily manage policies governing access to a resource (file, folder, database, table, column, etc.) for a particular set of users and/or groups, and enforce those policies within Hadoop. They can also enable audit tracking and policy analytics for deeper control of the environment. Apache Ranger also provides the ability to delegate administration of certain data to other group owners, with the aim of decentralizing data ownership.

What projects does Apache Ranger support today?

Apache Ranger supports fine-grained authorization and auditing for the following Apache projects:
